Humanitarian emergencies pose a significant risk to human health. Communicable diseases are one of the primary health concerns in humanitarian emergencies (1-18). The outbreaks of diphtheria and measles among the Rohingya refugees are striking examples of this (19-22).

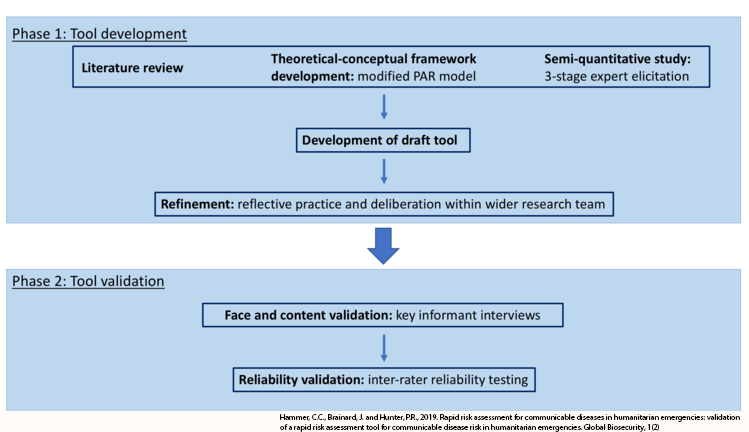

Early identification of at-risk populations is an important step not only towards a better response but also towards preparedness and the prevention of outbreaks, or at least of more serious outbreaks. Rapid risk assessment is therefore a priority research area. The aim of this study was to develop and validate an easy-to-use rapid risk assessment tool for communicable diseases in humanitarian emergencies. The tool was designed as part of a larger project to understand vulnerabilities to communicable disease outbreaks in humanitarian emergencies that are not themselves caused by a disease outbreak. The development of the tool draws on the existing literature on outbreaks in humanitarian crises, a systematic review of the literature on communicable disease risk factors in complex humanitarian emergencies (23), the development of a theoretical-conceptual framework (24), expert elicitation (25) and the validation phase. The results of these steps were used to build the tool. An early draft was further refined through reflective practice and deliberations within the research team prior to the validation and testing process described in this paper (see Figure 1). The tool captures data on the 20 most critical risk factors that indicate a heightened risk of communicable disease outbreaks in humanitarian emergencies. These fall into three categories: water, sanitation and hygiene (WASH); health; and socio-political. Table 1 gives an overview of the risk factors and their sub-factors, and Table 2 defines the main elements of the tool. The tool is accompanied by a guide. It presents the results of the risk assessment both numerically, in the form of weighted risk scores, and visually, using a traffic light system. Ideally, the risk assessment should be completed immediately following the onset of an emergency or the set-up of a response operation, within the first 72 hours to 14 days. The assessment should then be repeated at regular intervals throughout the response and recovery phases.

Tool development process

Table 1

Risk factors and sub-factors (items) in the tool.

| Category | Risk Factors | Sub-factor |
|---|---|---|
| WASH | Lack of clean water | Not applicable |
| WASH | Lack of toilets | Not applicable |
| WASH | Inadequate distance between housing and human waste disposal | Average distance between housing and human waste disposal |
| | | Shortest distance between housing and human waste disposal |
| WASH | Flooding (waste water) | Not applicable |
| WASH | Lack of waste management | Not applicable |
| WASH | Exposure to disease vectors | Not applicable |
| Health | Lack of health facilities | Access to health facilities |
| | | Availability of clinics and/or health posts (or other primary care facilities) |
| | | Disease surveillance |
| | | Hospital beds |
| Health | Lack of health workers | Doctors |
| | | Nurses |
| | | Community health care workers |
| Health | Insufficient vaccine coverage | Measles |
| | | Meningococcal disease |
| | | Polio |
| | | Hepatitis B |
| Health | Poor health status of the population | Not applicable |
| Health | Lack of medicines | Not applicable |
| Health | Reluctance to follow disease prevention measures | Local health professionals |
| | | General population |
| Health | Insufficient nutrient intake | Not applicable |
| Health | Lack of health and hygiene education | Not applicable |
| Socio-political | Extreme poverty and food insecurity | Not applicable |
| Socio-political | Overcrowding | Not applicable |
| Socio-political | Ongoing conflict | Not applicable |
| Socio-political | Population displacement | Not applicable |
| Socio-political | Lack of organisational and political will to address public health issues | (I)NGOs and donors |
| | | Local and national government |
| Socio-political | Breakdown of government and infrastructure services | Transport |
| | | Communications |
| | | Education |
| | | Electricity |

Table 2

Main elements of the rapid risk assessment tool (for more detailed descriptions refer to the tool guide in the supplemental files).

| Element | Description |
|---|---|
| Category | Category describes the sphere of the risk factor in question. |
| Risk Factor | The risk factor is the factor being measured; it can have multiple sub-factors. |
| Measure | The measure defines how the risk factor is measured. Measures can be quantitative or qualitative. |
| Answer | The answer is the observed situation, recorded either as a quantity or, for qualitative factors, as a selection from a set of options. |
| Score (0-2) | The score quantifies and standardises the answer, using pre-defined categories for scores of 0, 1 and 2. |
| Weight in this type of emergency (0-4) | The weight is pre-determined by the emergency type selected. |
| Weighted Risk Score (0-8) | The weighted risk score is the product of the score reached and the weight of the risk factor in the selected emergency type. |
| Overall weighted risk score (0-8) | The overall weighted risk score combines the weighted risk scores for all included risk factors. It uses the same scale as the weighted risk scores. |
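The scoring arithmetic in Table 2 can be sketched in Python. The weighted risk score follows the table directly (score × weight, 0-8). Two parts are assumptions made only for this illustration: that the overall weighted risk score is the plain mean of the weighted risk scores of the answered items (Table 2 only says that it combines them on the same 0-8 scale), and the traffic-light cut-offs `AMBER_FROM` and `RED_FROM`, which are hypothetical placeholders rather than the thresholds defined in the tool guide.

```python
from statistics import mean
from typing import Optional

# Hypothetical traffic-light cut-offs on the 0-8 scale (NOT the tool's
# thresholds, which are defined in the tool guide).
AMBER_FROM = 3.0
RED_FROM = 5.0


def weighted_risk_score(score: int, weight: int) -> int:
    """Weighted risk score = score (0-2) x weight (0-4), giving 0-8 (Table 2)."""
    if not 0 <= score <= 2:
        raise ValueError("score must be between 0 and 2")
    if not 0 <= weight <= 4:
        raise ValueError("weight must be between 0 and 4")
    return score * weight


def overall_weighted_risk_score(items: list[tuple[Optional[int], int]]) -> float:
    """Assumed aggregation: mean weighted score of the answered items.

    Each item is a (score, weight) pair; a score of None marks an item
    that could not be answered and is left out of the mean.
    """
    answered = [weighted_risk_score(s, w) for s, w in items if s is not None]
    if not answered:
        raise ValueError("at least one item must be answered")
    return mean(answered)


def traffic_light(value: float) -> str:
    """Map a 0-8 score to a colour using the hypothetical cut-offs above."""
    if value >= RED_FROM:
        return "red"
    if value >= AMBER_FROM:
        return "amber"
    return "green"


# Illustrative input: four risk factors, one of them unanswered.
example = [(2, 4), (1, 3), (0, 2), (None, 4)]
overall = overall_weighted_risk_score(example)
print(round(overall, 2), traffic_light(overall))  # 3.67 amber
```

Skipping unanswered items, rather than scoring them as 0, avoids reporting missing data as low risk; whether the tool itself handles gaps this way is not stated here.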

The tool differs considerably from previous tools such as the risk assessment matrix described as part of the World Health Organization’s (WHO) early warning systems (EWARN) (26, 27). Our tool does not ask users to judge qualitatively how much each factor contributes to outbreak risk, nor to make an overall qualitative judgement of that risk. Instead, users answer factual questions such as ‘how many litres of clean water are available per person per day?’, and the tool calculates what the answer means in terms of outbreak risk. Thus, the tool enables responders with little or no health protection experience to enter secondary or primary data and obtain an evidence-based and objective assessment based on those data. Subjectivity is thereby confined to the collection and evaluation of the data; it does not enter the assessment of what the factors mean for outbreak risk. This should also reduce the cognitive bias often inherent in risk assessment processes. As such, this tool serves a different purpose from the WHO EWARN risk assessment matrix. For organisations not involved in health protection, the overall weighted risk score can be used to support their overall risk assessment. For organisations involved in health protection or coordination, the individual weighted risk scores can be used to prioritise the response, as can the overall weighted risk score if the tool is completed for multiple spatial units (e.g. sections of a refugee camp). Overall, the tool can be useful for smaller organisations that do not have extensive health protection portfolios, and as a companion to existing well-established mechanisms such as WHO EWARN.
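To illustrate how a factual answer such as litres of clean water per person per day can be turned into a standardised 0-2 score without the user judging its risk, the sketch below uses a simple threshold lookup. The function name `score_clean_water` and both cut-offs are hypothetical; the tool's real categories for this risk factor are defined in the tool guide and are not reproduced here.

```python
from typing import Optional

# Hypothetical cut-offs in litres per person per day; the tool's own
# categories for scores 0, 1 and 2 are set out in the tool guide.
SCORE_0_FROM = 15.0  # at or above: scored 0 (lowest risk)
SCORE_1_FROM = 7.5   # at or above (but below SCORE_0_FROM): scored 1


def score_clean_water(litres_per_person_per_day: Optional[float]) -> Optional[int]:
    """Standardise an observed quantity into a 0-2 risk score.

    Returns None when the answer is missing, so the item shows up as N/A
    instead of being silently treated as low risk.
    """
    if litres_per_person_per_day is None:
        return None
    if litres_per_person_per_day < 0:
        raise ValueError("litres per person per day cannot be negative")
    if litres_per_person_per_day >= SCORE_0_FROM:
        return 0
    if litres_per_person_per_day >= SCORE_1_FROM:
        return 1
    return 2


for answer in (20.0, 10.0, 4.0, None):
    print(answer, "->", score_clean_water(answer))
```

Because the cut-offs live in the lookup rather than in the user's head, two users entering the same figure always obtain the same score; only the figure itself remains open to error.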

The aim of the research described in this paper, the validation phase, was to test the validity of the content of the tool and its reliability. This was done in a structured process, with the tool revised in stages based on the results of each preceding step.

The first part of this study was performed remotely with experts from the fields of humanitarian aid and health protection and the second part of the study was performed in the field with volunteer aid workers. The tool that was tested was in English. The aims of this research were to determine the validity of the content of the tool and its inter-rater reliability when used by aid workers with no or limited expertise in health protection. We used mixed methods to ensure robust testing and optimal fulfilment of the aims.

Validity testing was done through key-informant interviews. These were conducted remotely to include a wide variety of participants from different backgrounds and geographic locations, including people currently deployed in the field. The reliability testing was done in August 2018 in Thessaloniki, Northern Greece. Thessaloniki remains a hotspot for the response to the European migration crisis and hosts many smaller and volunteer-based organisations. We partnered with the InterEuropean Human Aid Association (IHA). IHA started as an entirely volunteer-based organisation in 2015 and has since gained professional recognition. The organisation works with Greek and international partners and provides services to refugees in camps in Northern Greece. However, the reliability testing was not done specifically in the context of the migration crisis, as the response effort in Greece differs considerably from other humanitarian emergencies. Rather, volunteers from IHA were involved to test whether the tool was reliable for volunteers with a background in humanitarian aid but not necessarily in medicine or health protection.

For the first part of the study, we invited participants with backgrounds in humanitarian medicine, health protection, disaster and humanitarian studies, and humanitarian aid. We interviewed 25 people. We did not determine the study size a priori because we agree with Sim et al. (28) that a priori determination of the sample size for qualitative key-informant interviews is fraught with problems due to the underlying “questionable philosophical and/or methodological assumptions”. We reached saturation after 20 interviews and conducted another five interviews to confirm it. We interviewed 17 people with a health background, five academics and three humanitarian generalists (these represent the participants’ primary backgrounds; several participants fell into multiple groups). Specific practitioner backgrounds included expertise in water, sanitation and hygiene, epidemiology, microbiology, health protection, logistics, clinical medicine and nursing. In addition to academics from institutions in Europe and North America, we interviewed participants from Public Health England, the WHO, Doctors Without Borders, the UK Public Health Rapid Support Team, the United Nations Relief and Works Agency in Jordan and several other organisations. Participants were from Europe, Asia, Africa and North America.

For the reliability testing, we invited volunteers from IHA and partner organisations to take part. We conducted adapted focus groups with four groups of two participants each. Participants were from various European countries and all had a background in humanitarian aid, specifically in response work in formal and informal refugee camps, with varying levels of experience. Participants had no prior training in health protection, epidemiology or risk assessment.

Validity testing covered both content validity, defined as the usefulness, appropriateness, relevance and suitability of the tool (29), and face validity, defined as the extent to which the tool actually measures communicable disease risk and hence as a measure of its accuracy (30). Participants were given access to the tool. They were asked to comment on the design of the tool, specifically its layout and ease of use, the order and inclusion of risk factors, and the amount of information (i.e. the length of the tool). Additionally, each risk factor and its measurement were discussed individually with regard to suitability. The interview concluded with a discussion of the colour-coding system and the interpretation of the results given by the tool. The interviews combined open-ended introductory questions with more detailed follow-up queries. If participants wished, they were given details of the development of the tool and the data sources used. Interviews followed an open-ended interview guide, and responses were recorded on standardised response sheets. Response sheets were reviewed after each interview, and key themes and repeated suggestions for improvement were recorded in a running document.

The tool was designed so that aid workers with little or no experience in health protection would be able to fill it in consistently with the right information. This was assessed through reliability testing with two fictitious scenarios (31). Participants filled in the tool for both scenarios: one a displacement crisis set in a refugee camp and the other a response to an earthquake. They had access to the tool, the tool guide and a scenario description. The tool was completed in pairs, and inter-rater reliability testing was based on the completed tool documents provided by the participants. Scenarios were modelled on real emergencies (mainly by combining aspects of different past emergencies) and authentically reflected situations with incomplete information. Scenarios were provided in written form. There was no time limit for completing the tool, but completion times were recorded for each group and scenario. The completed tools were analysed against an answer sheet, and agreement with the answer sheet was recorded for the overall weighted risk score as well as line by line for each individual risk factor and sub-factor. Accuracy was determined as inter-rater reliability against the answer sheet. Any answer that did not match the answer sheet was considered a mistake, even if it was a blank. We recognise that some answers could be contested; hence our target was 70% agreement with the answer sheet rather than a higher threshold. Potentially contestable answers were those that required a qualitative judgement of the situation. After completing the two scenarios, all groups came together as one focus group to discuss the ease of use of the tool. The results of this discussion were recorded as field notes and considered in the final changes made to the tool.
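The line-by-line comparison against the answer sheet can be expressed as a short function. Here N/A is represented as `None`; as in Tables 4 and 5, an N/A that matches an N/A on the answer sheet counts as agreement, while a blank where the sheet expects a score counts as a mistake. The function names are illustrative, not part of the tool, and the example data are items 5, 7a and 7b of scenario 2 (Table 5).

```python
from typing import Optional, Sequence

Score = Optional[int]  # None represents N/A (no score assigned)


def agreement_pct(answer_sheet: Sequence[Score], group: Sequence[Score]) -> float:
    """Percentage of items on which a group's score equals the answer sheet."""
    if len(answer_sheet) != len(group):
        raise ValueError("answer sheet and group must cover the same items")
    matches = sum(1 for ref, obs in zip(answer_sheet, group) if ref == obs)
    return 100 * matches / len(answer_sheet)


def groups_correct_per_item(answer_sheet: Sequence[Score],
                            groups: Sequence[Sequence[Score]]) -> list[int]:
    """Number of groups agreeing with the answer sheet on each item."""
    return [sum(1 for g in groups if g[i] == ref)
            for i, ref in enumerate(answer_sheet)]


# Items 5, 7a and 7b of scenario 2 (Table 5).
sheet = [2, 2, None]
groups = [
    [2, 2, None],   # group 1
    [2, 1, 2],      # group 2
    [None, 2, 2],   # group 3: item 5 left blank, counted as a mistake
    [2, 1, 1],      # group 4
]
print(groups_correct_per_item(sheet, groups))  # [3, 2, 1]
print([round(agreement_pct(sheet, g), 2) for g in groups])
```

The per-item counts match the "Number correct" column of Table 5 for these three items; running the same functions over all 34 items per group would give the group-level percentages.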

Changes to the tool were made after each phase of the testing. After analysis of the reliability testing, the tool was finalised and is included as Supplementary Files 1 (digital) and 2 (print). The guide is available as Supplementary File 3. The two scenarios are available as Supplementary Files 4 and 5.

Participants generally found the tool useful, comprehensible and accurate (24/25). Positive feedback was given for the inclusion of both a print and a digital version of the tool. Those who suggested the inclusion of other risk factors agreed that the 20 included were suitable after discussion of the data sources and evidence upon which the design of the tool was based. Suggestions for changes made by the participants included issues regarding the layout and design of the tool, the measurement of qualitative risk factors and the breakdown of risk factors into sub-factors. Changes were incorporated in subsequent iterations of the tool.

Specific changes were made based on the face and content validation. The risk factor ‘health facilities’, which initially only included hospital beds, was extended to include primary care facilities, access to health care and disease surveillance. For several risk factors, responses were broken down by group. For example, ‘lack of political and organisational will to address public health issues’ was split into the groups ‘local and national government’ and ‘non-governmental organisations and donors’. A risk factor concerning government services was broadened to cover government and infrastructure services and broken down into roads/transport, communications, electricity and education. Minor changes were made to the wording of some risk factors. Additionally, for the digital version, a tab with a mock filled-in version was added to give users a visual example, and risk factors were sorted into clusters based on suggestions from participants. Participants also provided detailed input into issues to be included in the accompanying guide, including the use of a smaller spatial scale where possible and the need to repeat the assessment if the situation changes significantly.

Inter-rater reliability testing was based on the completed tools provided by all groups for both scenarios (n=8). The time participants needed to complete the tool decreased with the second scenario: the average completion time was 33 minutes and 1 second for scenario 1 and 13 minutes and 15 seconds for scenario 2 (see Table 3). This suggests that the tool becomes easier to use once participants have some experience with it, which discussions with the participants confirmed.

The aim of the analysis of the completed tools was to test whether participants with little or no experience with health protection could fill in the tool and reach accuracy levels of 70% or greater. Participants without prior health protection experience were able to fill in the tool with an accuracy of 81.25% (SD 4.08) across both scenarios (82.35% and 80.15% for scenarios 1 and 2 respectively). Answers that required a qualitative judgement of the situation were the most likely to be answered wrongly.
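The reported accuracy figures can be recomputed from the group totals in Tables 4 and 5 (29, 29, 26 and 28 correct items in scenario 1; 31, 25, 28 and 25 in scenario 2, each out of 34). The text does not state how the SD was calculated; the sketch below assumes it is the population standard deviation (`statistics.pstdev`) of each group's accuracy averaged over both scenarios, which is one reading that reproduces 4.08.

```python
from statistics import mean, pstdev

ITEMS = 34
# Items matching the answer sheet, groups 1-4 (totals rows of Tables 4 and 5).
correct = {
    "scenario 1": [29, 29, 26, 28],
    "scenario 2": [31, 25, 28, 25],
}


def pct(n_correct: int, n_items: int) -> float:
    return 100 * n_correct / n_items


for scenario, counts in correct.items():
    print(scenario, round(pct(sum(counts), ITEMS * len(counts)), 2))

# Each group's accuracy over both scenarios (2 x 34 items).
group_acc = [pct(s1 + s2, 2 * ITEMS) for s1, s2 in zip(*correct.values())]
print("mean", round(mean(group_acc), 2), "SD", round(pstdev(group_acc), 2))
```

Using the sample standard deviation (`statistics.stdev`) on the same four values would give a noticeably larger figure, which is why the population version is the assumed reading here.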

In scenario 1, all four groups gave the correct answer for 18 of the 34 items (52.94%). For 11 items, three groups (75%) gave the correct answer. The remaining five items were answered correctly by two or fewer groups (50% or lower). Quantitative items were generally more likely to be answered correctly (8 of 14 quantitative items versus 10 of 20 qualitative items were answered correctly by all groups).

Table 4

Item and group validation for scenario 1 (line-by-line and group-by-group comparison of the answers of all four groups for scenario one in comparison with the answer sheet); for each item a score of 0, 1 or 2 may be given, N/A refers to items with no score assigned (i.e. not answered) by the respective group.

| Risk Factor | Type | Answer Sheet (score) | Group 1 (score) | Group 2 (score) | Group 3 (score) | Group 4 (score) | Number correct | % Correct |
|---|---|---|---|---|---|---|---|---|
| 1 | quant | 0 | 0 | 0 | 0 | 0 | 4 | 100 |
| 2 | quant | 2 | 2 | 2 | 2 | 2 | 4 | 100 |
| 3a | quant | 1 | 1 | 1 | N/A | 1 | 3 | 75 |
| 3b | quant | 1 | 1 | 1 | 1 | 1 | 4 | 100 |
| 4 | qual | 0 | 0 | 0 | 0 | 0 | 4 | 100 |
| 5 | qual | 1 | 1 | 1 | 1 | 1 | 4 | 100 |
| 6 | qual | 1 | 2 | 1 | 1 | 1 | 3 | 75 |
| 7a | qual | 0 | 2 | 1 | 1 | 0 | 1 | 25 |
| 7b | qual | 0 | 2 | 0 | 1 | 0 | 2 | 50 |
| 7c | qual | N/A | N/A | N/A | 1 | 1 | 2 | 50 |
| 7d | quant | 1 | 1 | 1 | 1 | 2 | 3 | 75 |
| 8a | quant | 0 | 0 | 0 | 0 | 1 | 3 | 75 |
| 8b | quant | 0 | 0 | 0 | 0 | 2 | 3 | 75 |
| 8c | quant | 1 | 1 | 1 | 1 | 2 | 3 | 75 |
| 9a | quant | 0 | 0 | 0 | 0 | 0 | 4 | 100 |
| 9b | quant | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 9c | quant | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 9d | quant | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 10 | qual | 1 | 1 | 1 | N/A | 1 | 3 | 75 |
| 11 | qual | 1 | 1 | 1 | 1 | 1 | 4 | 100 |
| 12a | qual | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 12b | qual | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 13 | qual | 0 | 0 | 0 | 0 | 0 | 4 | 100 |
| 14 | qual | 0 | 2 | N/A | 1 | 1 | 0 | 0 |
| 15 | quant | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 16 | quant | 0 | 0 | 1 | 0 | 0 | 3 | 75 |
| 17 | qual | 1 | 1 | 1 | 1 | 1 | 4 | 100 |
| 18 | qual | 2 | 2 | N/A | N/A | 2 | 2 | 50 |
| 19a | qual | 1 | 1 | 0 | 1 | 1 | 3 | 75 |
| 19b | qual | 0 | 1 | 0 | 0 | 0 | 3 | 75 |
| 20a | qual | 0 | 0 | 0 | 0 | 0 | 4 | 100 |
| 20b | qual | 1 | 1 | 1 | 2 | 1 | 3 | 75 |
| 20c | qual | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 20d | qual | 1 | 1 | 1 | 1 | 1 | 4 | 100 |
| Correct | | 34 | 29 | 29 | 26 | 28 | | |
| % Correct | | 100 | 85.29 | 85.29 | 76.47 | 82.35 | | 82.35 |

In scenario 2, 58.82% of items (20 of 34) were answered correctly by all four groups and 17.65% (six items) by three groups. Two or fewer groups answered the remaining 23.53% (eight items) correctly. As in scenario 1, quantitative items were more likely to be answered correctly (92.86% of quantitative items were answered correctly by all groups versus 35% of qualitative items).

Table 5

Item and group validation for scenario 2 (line-by-line and group-by-group comparison of the answers of all four groups for scenario two in comparison with the answer sheet); for each item a score of 0, 1 or 2 may be given, N/A refers to items with no score assigned (i.e. not answered) by the respective group.

| Risk Factor | Type | Answer Sheet (score) | Group 1 (score) | Group 2 (score) | Group 3 (score) | Group 4 (score) | Number correct | % Correct |
|---|---|---|---|---|---|---|---|---|
| 1 | quant | 1 | 1 | 1 | 1 | 1 | 4 | 100 |
| 2 | quant | 2 | 2 | 2 | 2 | 2 | 4 | 100 |
| 3a | quant | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 3b | quant | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 4 | qual | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 5 | qual | 2 | 2 | 2 | N/A | 2 | 3 | 75 |
| 6 | qual | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 7a | qual | 2 | 2 | 1 | 2 | 1 | 2 | 50 |
| 7b | qual | N/A | N/A | 2 | 2 | 1 | 1 | 25 |
| 7c | qual | 2 | 2 | N/A | 2 | 2 | 3 | 75 |
| 7d | quant | 2 | 2 | 2 | 2 | 2 | 4 | 100 |
| 8a | quant | 2 | 2 | 2 | 2 | 2 | 4 | 100 |
| 8b | quant | 2 | 2 | 2 | 2 | 2 | 4 | 100 |
| 8c | quant | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 9a | quant | 0 | 0 | 0 | 0 | 0 | 4 | 100 |
| 9b | quant | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 9c | quant | 0 | 0 | 0 | 0 | 0 | 4 | 100 |
| 9d | quant | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 10 | qual | 0 | 0 | 0 | 2 | 1 | 2 | 50 |
| 11 | qual | N/A | N/A | 2 | 2 | 1 | 1 | 25 |
| 12a | qual | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 12b | qual | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 13 | qual | N/A | N/A | N/A | 2 | N/A | 3 | 75 |
| 14 | qual | 2 | N/A | 0 | 2 | N/A | 1 | 25 |
| 15 | quant | 2 | N/A | N/A | 2 | N/A | 1 | 25 |
| 16 | quant | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 17 | qual | 0 | N/A | 0 | 2 | N/A | 1 | 25 |
| 18 | qual | N/A | N/A | N/A | N/A | 2 | 3 | 75 |
| 19a | qual | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 19b | qual | N/A | N/A | N/A | N/A | N/A | 4 | 100 |
| 20a | qual | 2 | 2 | 2 | 2 | 2 | 4 | 100 |
| 20b | qual | 2 | 2 | N/A | 2 | 2 | 3 | 75 |
| 20c | qual | 0 | 0 | 2 | 0 | N/A | 2 | 50 |
| 20d | qual | 2 | 2 | N/A | 2 | 2 | 3 | 75 |
| Correct | | 34 | 31 | 25 | 28 | 25 | | |
| % Correct | | 100 | 91.18 | 73.53 | 82.35 | 73.53 | | 80.15 |
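The scenario 2 breakdown of items by the number of groups that answered them correctly (58.82%, 17.65% and 23.53%; 92.86% versus 35% for quantitative and qualitative items) can be recomputed from the Type and Number correct columns of Table 5. This minimal sketch transcribes those two columns by hand; the band labels are illustrative.

```python
from collections import Counter

# (item, type, number of groups matching the answer sheet), from Table 5
TABLE_5 = [
    ("1", "quant", 4), ("2", "quant", 4), ("3a", "quant", 4), ("3b", "quant", 4),
    ("4", "qual", 4), ("5", "qual", 3), ("6", "qual", 4), ("7a", "qual", 2),
    ("7b", "qual", 1), ("7c", "qual", 3), ("7d", "quant", 4), ("8a", "quant", 4),
    ("8b", "quant", 4), ("8c", "quant", 4), ("9a", "quant", 4), ("9b", "quant", 4),
    ("9c", "quant", 4), ("9d", "quant", 4), ("10", "qual", 2), ("11", "qual", 1),
    ("12a", "qual", 4), ("12b", "qual", 4), ("13", "qual", 3), ("14", "qual", 1),
    ("15", "quant", 1), ("16", "quant", 4), ("17", "qual", 1), ("18", "qual", 3),
    ("19a", "qual", 4), ("19b", "qual", 4), ("20a", "qual", 4), ("20b", "qual", 3),
    ("20c", "qual", 2), ("20d", "qual", 3),
]


def band(n_groups_correct: int) -> str:
    """Group items by how many of the four groups matched the answer sheet."""
    if n_groups_correct == 4:
        return "all four groups"
    if n_groups_correct == 3:
        return "three groups"
    return "two groups or fewer"


bands = Counter(band(n) for _, _, n in TABLE_5)
for name in ("all four groups", "three groups", "two groups or fewer"):
    share = 100 * bands[name] / len(TABLE_5)
    print(f"{name}: {bands[name]} items ({share:.2f}%)")

for kind in ("quant", "qual"):
    counts = [n for _, t, n in TABLE_5 if t == kind]
    all_correct = sum(1 for n in counts if n == 4)
    print(f"{kind}: {all_correct}/{len(counts)} correct in all groups "
          f"({100 * all_correct / len(counts):.2f}%)")
```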

In addition to the line-by-line and group-by-group analysis, the overall weighted risk scores calculated by the participants were compared with the overall weighted risk score derived from the answer sheets (see Table 6).

This study successfully validated a newly developed tool to rapidly assess communicable disease risks in humanitarian emergencies. The results of the validity and reliability testing suggest that the tool is useful and appropriate for aid workers with and without training in health protection to rapidly assess the risk that communicable diseases pose in the context of their field deployment. While reliability testing was done in Greece, no part of the study was specific to a single context, and the tool is suitable for all types of humanitarian emergencies that are not caused by the outbreak of a communicable disease. The tool can be used in the following settings: conflicts and war zones; floods, tropical storms and other hydro-meteorological disasters; geo-disasters such as earthquakes; complex emergencies; famines; tsunamis; protracted crises; and displacement crises with displacement into refugee and similar camps.

The participants’ feedback on the tool was positive and reflected both face and content validity. They found the tool easy to use and remarked that, for risk factors that are not quantitative, an element of subjectivity remains, but that this is limited by the detailed descriptions in the tool guide. The tool was modified based on their feedback.

This tool can only be as reliable as the data used to fill it in. If data are missing, the tool can still be used with an incomplete data set; however, this may give an inaccurate representation of the actual risk. Alternatively, additional data can be collected on the missing factors and sub-factors. While the tool is mainly designed to draw on existing data, a suitable method for additional data collection would be cross-sectional surveys with random or cluster random sampling.

The results from the evaluation of the completed tools for the two scenarios show that aid workers with little or no experience in health protection, risk assessment or epidemiology can successfully and adequately use this tool to assess the risk of a communicable disease outbreak in different types of emergencies. Before field validation, we agreed that agreement of 70% or greater with the answer sheet would be sufficient to consider the tool reliable in the field, especially if the main source of error was qualitative assessments. We acknowledge that some of the qualitative items are open to debate, and the answer sheet is therefore less directive for them than for the quantitative items. Hence, we consider these results to demonstrate the adequacy of the tool.

While the overall weighted risk scores calculated by the participants were higher than those from the answer sheet, they were close enough to consider the tool adequate. For the overall weighted risk scores, we considered adequacy to be achieved if the overall weighted risk score of the answer sheet lay within one standard deviation of the mean overall weighted risk score achieved by the participants. This was the case for both scenarios (see Table 6). Moreover, because the participants’ scores were generally higher than the answer-sheet score, and we consider erring on the side of caution advisable, any discrepancies between the answer sheet and the participants’ answers are particularly unproblematic in this context.
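The adequacy criterion for the overall weighted risk scores can be written as a small check. This is a sketch under stated assumptions: the study's values are in Table 6 and are not reproduced here, so the numbers below are purely illustrative, and the use of the sample standard deviation (`statistics.stdev`) rather than the population one is an assumption. A second helper checks the direction of any discrepancy, i.e. whether participants erred on the side of caution.

```python
from statistics import mean, stdev


def within_one_sd(reference: float, participant_scores: list[float]) -> bool:
    """True if the answer-sheet score lies within one SD of the participants' mean.

    Uses the sample standard deviation (an assumption), so at least two
    participant scores are required.
    """
    if len(participant_scores) < 2:
        raise ValueError("need at least two participant scores")
    centre = mean(participant_scores)
    return abs(reference - centre) <= stdev(participant_scores)


def erred_on_side_of_caution(reference: float, participant_scores: list[float]) -> bool:
    """True if participants, on average, scored risk at or above the answer sheet."""
    return mean(participant_scores) >= reference


# Purely illustrative values (not the study's data, which are in Table 6).
scores = [3.4, 3.9, 3.1, 3.6]
print(within_one_sd(3.2, scores), erred_on_side_of_caution(3.2, scores))
```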

Based on the reduction in completion time from scenario 1 to scenario 2, we assume that repeated use of the tool will make it easier to use and reduce the time needed to complete it. Familiarity with the tool does not seem to increase accuracy. However, two scenarios are too few to support firm conclusions about whether repeated use of the tool makes an individual or group more accurate.

There are several limitations to this study, as well as to the tool itself. The tool is only suitable for certain emergency types. Because of its conceptual basis, it is not suitable for any emergency in which an outbreak of an infectious disease constitutes the humanitarian emergency, such as the 2014 West Africa Ebola outbreak. Additionally, the tool does not have a specific emergency type for entrapment crises or for displacement crises in which most of the displaced population(s) move into urban and non-camp settings. If such an emergency occurs in a situation to which one of the other emergency types (most likely conflict) also applies, that emergency type should be selected; this will be the case for most, if not all, entrapment crises. However, if urban/non-camp displacement is too far removed from the original cause of the displacement, the tool is not suitable. The decision not to include such situations was based on the comparatively limited evidence base for them, and the authors call for more primary research into health needs and communicable diseases in both entrapment crises and displacement into urban/non-camp settings.

As this tool focuses on the 20 most critical risk factors for communicable disease outbreaks in humanitarian emergencies, we expect that some users will feel that important issues are missing. Risk factors were selected based solely on their ability to be a reliable indicator of risk and not on any other considerations. Issues like sexual and reproductive health, as well as the protection of vulnerable groups, are extremely important in humanitarian emergencies. They are not included in this tool because they are not among the best indicators of disease outbreak risk – not because they are not important.

The scores calculated by the tool will be less reliable in situations of considerable inequity. In such situations, smaller spatial units should be used. If measures are not distributed equitably, their reliability and the reliability of the overall score is questionable.

While the study included systematic validity and reliability testing, the results, and hence the suitability and added value of the tool, will only become entirely clear after the tool has been used in the field for a longer period and on multiple occasions. Such a test was not within the scope of this study. We hope that over the coming months and years, organisations and individuals involved in the response to humanitarian emergencies will use the tool, either on its own or alongside other risk assessment procedures, and we would welcome feedback from any organisation using it. The small sample size of the inter-rater reliability test is another limitation. However, the consistency of the answers, and especially of the qualitative data obtained, suggests that the results are reliable despite the small sample size. Finally, the majority of our participants represent (international) response organisations rather than host countries and affected populations.

We attempted to develop a rapid risk assessment tool for communicable diseases in humanitarian emergencies that gave both quantitative and qualitative indications of risk level and could be used by aid workers with little or no training in health protection.

The tool works as an initial assessment tool and is applicable across a large range of different settings.

In some cases, specialised organisations might want a more focused risk assessment that only looks at their own area. Because the tool is interdisciplinary, one hurdle is that it may be difficult to convince such organisations, particularly highly specialised ones, that the tool is suitable and useful in the first instance, even if they have their own more detailed and subject-specific assessments. However, one of the main groups this tool is aimed at is smaller organisations that do not have extensive health protection portfolios. For those organisations, this tool can be empowering when used together with an initial needs assessment to understand priority areas for action both within and beyond their own scope.

In the context of larger, especially country-level, responses, we do not see this tool as a substitute for, or in competition with, well-established mechanisms such as the WHO’s EWARN (26, 27). Rather, it is a companion that seeks to quantify outbreak risk (to a degree) and to make rapid risk assessment for communicable diseases in humanitarian emergencies possible without the expert knowledge needed to complete a qualitative risk assessment. Additionally, the results of the tool can shed light on how the wider humanitarian crisis is affecting health outcomes in the context in which it is completed.

In addition to the digital and print versions, the tool could be developed into an app for mobile devices such as tablets and smartphones. It can be used as a general rapid risk assessment tool that users become highly familiar with across different deployments, and it can also be adapted to specific circumstances and settings. The tool and the accompanying guide are available in the public domain, and the authors are available for any questions regarding the use and adaptation of the tool. We would also welcome any feedback from organisations adopting the tool as part of their rapid risk assessment.

Supplemental information as referenced in the text.

The research study has been approved under the regulations of the University of East Anglia’s Faculty of Health and Medicine Ethics Committee. All participants provided written informed consent prior to participating.

The authors declare no competing interests.

All authors helped design the study and provided input for the tool. CH designed the tool and programmed changes based on the testing. CH conducted the interviews and the field investigation and wrote the draft manuscript. All authors approved the final analysis and manuscript.

The research was funded in part by the National Institute for Health Research Health Protection Research Unit (NIHR HPRU) in Emergency Preparedness and Response at King's College London in partnership with Public Health England (PHE), in collaboration with the University of East Anglia, Norwich Medical School. The views expressed are those of the author(s) and not necessarily those of the NHS, the NIHR, the Department of Health or Public Health England. Funders were not involved in designing the study.

We would like to thank all our research participants. We would especially like to thank the InterEuropean Human Aid Association Germany e.V. for hosting us.

1. Connolly M, Gayer M, Ryan M, Salama P, Spiegel P, Heymann D. Communicable diseases in complex emergencies: impact and challenges. The Lancet. 2004;364(9449):1974-83. DOI: https://doi.org/10.1016/S0140-6736(04)17481-3

2. Gayer M, Legros D, Formenty P, Connolly MA. Conflict and Emerging Infectious Diseases. Emerging Infectious Diseases. 2007;13(11):1625-31. DOI: https://doi.org/10.3201/eid1311.061093

3. Watson J, Gayer M, Connolly M. Epidemics after Natural Disasters. Emerging Infectious Diseases. 2007;13(1):1-5. DOI: https://doi.org/10.3201/eid1301.060779

4. Goma Epidemiology Group. Public health impact of Rwandan refugee crisis: what happened in Goma, Zaire, in July, 1994? Lancet. 1995;345:339-44. DOI: https://doi.org/10.1016/S0140-6736(95)90338-0

5. Ahmad K. Measles epidemic sweeps through Afghanistan. Lancet. 2000;355:1439. DOI: https://doi.org/10.1016/S0140-6736(05)74645-6

6. Diaz T, R A. Infectious diseases in a Nicaraguan refugee camp in Costa Rica. Trop Doct. 1989;19:14-7. DOI: https://doi.org/10.1177/004947558901900105

7. Hatch D, Waldman R, Lungu G, Piri C. Epidemic cholera during refugee resettlement in Malawi. Int J Epidemiol. 1994;23:1292-9. DOI: https://doi.org/10.1093/ije/23.6.1292

8. Mercer A. Mortality and morbidity in refugee camps in eastern Sudan: 1985-90. Disasters. 1992;16:28-42. DOI: https://doi.org/10.1111/j.1467-7717.1992.tb00373.x

9. Rowland M, Nosten F. Malaria epidemiology and control in refugee camps and complex emergencies. Annals of Tropical Medicine and Parasitology. 2001;95(8):741-54. DOI: https://doi.org/10.1080/00034983.2001.11813694

10. Paquet C. Vaccination in emergencies. Vaccine. 1999;17(Suppl 3):S116-9. DOI: https://doi.org/10.1016/S0264-410X(99)00305-9

11. Santaniello-Newton A, Hunter P. Management of an outbreak of meningococcal meningitis in a Sudanese refugee camp in Northern Uganda. Epidemiol Infect. 2000;124:75-81. DOI: https://doi.org/10.1017/S0950268899003398

12. Salama P, Spiegel P, Talley L, Waldman R. Lessons learned from complex emergencies over past decade. The Lancet. 2004;364(9447):1801-13. DOI: https://doi.org/10.1016/S0140-6736(04)17405-9

13. Talley L, Spiegel P, Girgis M. An investigation of increasing mortality among Congolese refugees in Lugufu camp, Tanzania, May-June 1999. J Refug Stud. 2001;14:412-27. DOI: https://doi.org/10.1093/jrs/14.4.412

14. Toole M. Mass population displacement. A global public health challenge. Infectious Disease Clinics of North America. 1995;9(2):353-66.

15. Toole M, Waldman R. The Public Health Aspects of Complex Emergencies and Refugee Situations. Annual Review of Public Health. 1997;18:283-312. DOI: https://doi.org/10.1146/annurev.publhealth.18.1.283

16. Toole M, Waldman R. An analysis of mortality trends among refugee populations in Somalia, Sudan, and Thailand. Bull World Health Organ. 1988;66:237-47.

17. Ahern M, Kovats R, Wilkinson P, Few R, Matthies F. Global health impacts of floods: epidemiologic evidence. Epidemiologic Reviews. 2005;27:36-46. DOI: https://doi.org/10.1093/epirev/mxi004

18. Few R, Ahern M, Matthies F, Kovats R. Floods, health and climate change: a strategic review. Norwich: Tyndall Centre for Climate Change, University of East Anglia; 2004.

19. WHO. Diphtheria outbreak response update Cox's Bazar, Bangladesh 27 December 2017. Geneva: WHO; 2017.

20. WHO. Diphtheria - Cox's Bazar in Bangladesh. Geneva: WHO; 2017.

21. WHO. WHO Weekly Situation Report #9. Geneva: WHO; 2018.

22. ReliefWeb. Bangladesh: Diphtheria outbreak. New York: ReliefWeb; 2018.

23. Hammer C, Brainard J, Hunter P. Risk factors and risk factor cascades for communicable disease outbreaks in complex humanitarian emergencies: A qualitative systematic review. BMJ Global Health. 2018;3(4):e000647. DOI: https://doi.org/10.1136/bmjgh-2017-000647

24. Hammer C, Brainard J, Innes A, Hunter P. (Re-) Conceptualising vulnerability as a part of risk in global health emergency response: Updating the pressure and release model for global health emergencies. Emerging Themes in Epidemiology. 2019;16(2):1-8. DOI: https://doi.org/10.1186/s12982-019-0084-3

25. Hammer C, Brainard J, Hunter P. Risk factors for communicable diseases in humanitarian emergencies and disasters: Results from a three-stage expert elicitation. Global Biosecurity. 2019;1(1):1-14. DOI: https://doi.org/10.31646/gbio.5

26. WHO. Outbreak surveillance and response in humanitarian emergencies. WHO guidelines for EWARN implementation. Geneva: WHO; 2012.

27. WHO. Communicable disease risk assessment: protocol for humanitarian emergencies. Geneva: WHO; 2007.

28. Sim J, Saunders B, Waterfield J, Kingstone T. Can sample size in qualitative research be determined a priori? International Journal of Social Research Methodology. 2018. DOI: https://doi.org/10.1080/13645579.2018.1454643

29. Messick S. Validity of Test Interpretation and Use. In: Alkin M, editor. Encyclopedia of Educational Research. 6th ed. New York: Macmillan; 1992.

30. Allen M, Yen W. Introduction to Measurement Theory. 1st ed. Prospect Heights: Waveland Press Inc.; 2001.

31. Gwet K. Handbook of Inter-Rater Reliability (3rd Edition): The Definitive Guide to Measuring the Extent of Agreement Among Multiple Raters. 3rd ed. Oakland: University of California Press; 2012.